Features

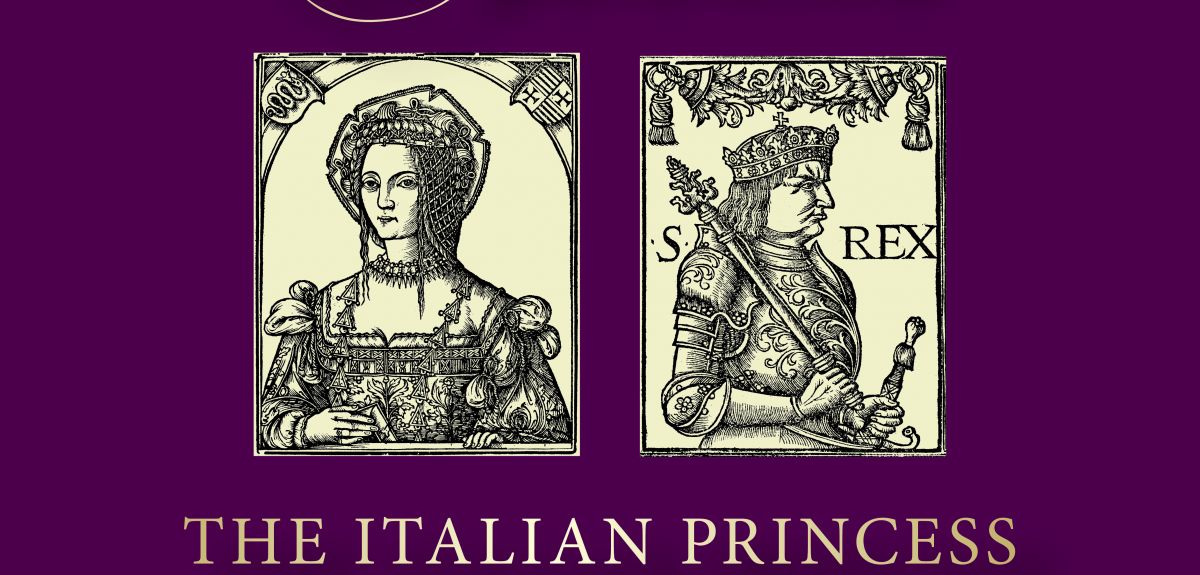

Tomorrow, 18 April 2018, marks the 500th anniversary of one of history’s most iconic royal love stories: the marriage of the Italian Princess Bona Sforza to King Sigismund of Poland, Grand Duke of Lithuania.

In celebration, the Weston Library, part of Oxford’s Bodleian Library, is hosting the exhibition A Renaissance Royal Wedding. The display chronicles the unexpected union of two royals from different cultures and the Italian-Polish connections that developed as a result.

On 18 April 1518, the two were married in Cracow cathedral, Poland. Their lavish wedding was attended by dignitaries and scholars from across Christendom. The marriage underpinned political and kinship links between the two countries that evolved into a dynamic exchange of people, books and ideas lasting for decades, a story still unfamiliar to many scholars and students of the sixteenth century.

An Italian ruler in her own right, Bona Sforza (1494-1557) was born a Milanese-Neapolitan princess, became queen of Poland through marriage, and in 1524 also became duchess of Bari, in Puglia. By contrast, King Sigismund (1467-1548) was the scion of a large royal house which, at its peak (c. 1525), ruled half of Europe, from Prague to Smolensk. Their five children, who later ruled in Poland-Lithuania, Sweden and Hungary, presented themselves throughout their lives as Polish-Italian royalty. To this day, Queen Bona Sforza remains a high-profile, if controversial, figure in Polish history.

The Bodleian exhibition showcases Oxford’s exceptional collections relating to early modern Poland and Italy. Highlights include Queen Bona Sforza’s own prayer book, very rarely displayed in the UK. The book is a tiny treasure of Central European manuscript illumination, painted by the Cracow master and monk Stanisław Samostrzelnik and decorated throughout with her Sforza coat of arms. Other objects in the display include an account of Bona’s bridal journey across Europe (the first book ever printed in Bari), a Ferraran medal of Bona from the Ashmolean’s collections, and early 16th-century orations, chronicles and verse produced in both Cracow and Naples that testify to the Italian-Jagiellonian connection.

The display, curated by Natalia Nowakowska and Katarzyna Kosior of Oxford’s History Faculty, is part of a European Research Council (ERC)-funded project entitled Jagiellonians: Dynasty, Memory and Identity in Central Europe. To accompany the display, a public lecture and an international conference on Renaissance royal weddings, with speakers from 13 countries, will be held at the University over the coming weeks (dates tbc).

A Renaissance Royal Wedding is running at the Weston Library, Broad Street, Oxford, until 13 May 2018.

Dr Molly Grace, NERC Knowledge Exchange Fellow in the Oxford University Department of Zoology, discusses the potential impact of the IUCN Green List of Species, a framework for measuring conservation success in a standard way. She and the team at the Interdisciplinary Centre for Conservation Science played a key role in developing it.

What is the goal of species conservation? Many would say that it is to prevent extinctions. However, while this is a necessary first step, conservationists have long recognised that it should not be the end goal. Once a species is stabilised, we can turn our attention to the business of recovery: trying to restore species as functional parts of the ecosystems from which humans have displaced them. To do this, however, there must be a rigorous and objective way to measure recovery.

Imagine this scenario: a species is teetering on the brink of extinction. In fact, it has been classified as Critically Endangered on the IUCN Red List of Threatened Species (the global standard for measuring extinction risk). You rally a global team of scientists, conservation planners and land managers to put their heads together and figure out how to save this species. The team works relentlessly to bring the species back from the edge, and little by little, it improves. After years, or even decades, of work, the team achieves its goal: the species is no longer considered threatened with extinction! Yet no one is celebrating. In fact, the mood has become decidedly sombre.

There is a simple reason for this apparent paradox. Because conservation budgets are limited, species classified as being at risk of extinction are preferentially awarded funding. While this makes sense at a wide scale (of course we should be working hardest to save the species that face an imminent risk of vanishing from the planet), it poses a problem for species that have benefited from concerted conservation actions and are no longer in the “danger zone.” Once the threatened classification vanishes, funding often vanishes too. Without continued protection, species may slip back into a threatened category, nullifying the effect of decades of work. There is thus a perverse incentive to stay in the exclusive “highly endangered” club, at least on paper. This also prevents us from celebrating the huge difference that conservation can make.

With the creation of the IUCN Green List of Species, we hope to reverse this perverse incentive to downplay conservation success. The Green List, still in development, will assess species recovery and how conservation actions have contributed to it. It will also estimate how dependent a species is on continued conservation, by projecting what would happen if these efforts stopped, and this can be used as an argument for continued conservation funding. With the Green List working in tandem with the Red List, we can stop thinking of Red List “downlisting” (moving from a higher category of extinction risk to a lower one) as a demotion that disincentivises funding, and instead see it for what it truly is: a promotion that should be celebrated. The framework would be applicable across all forms of life on the planet: aquatic and terrestrial species; plant, animal and fungal species; narrow endemics and wide-ranging species; you name it.

In our recent paper, we presented this framework, which is designed to measure recovery and to work in tandem with the assessment of extinction risk (the IUCN Red List) to tell the story of a species. For example, a species that is in no danger of disappearing from the planet (Red List assessment) might nonetheless be absent from many parts of the world where it was previously found, and so cannot be considered fully recovered (Green List assessment). This matters because the local loss of a species can have cascading effects on the rest of the ecosystem.

The Green List of Species also assesses the impact that conservation efforts have had, and could have in the future. For example, the charismatic saiga antelope (Saiga tatarica), found throughout Central Asia, is currently listed as “Critically Endangered” on the Red List. However, our Green List assessment shows that without past conservation efforts, many more populations would be extinct or in worse shape today. We also show that with continued conservation, the saiga’s future prospects are bright: a low risk of extinction, re-establishment of populations where they are locally extinct, and some functional populations.

We hope that the Green List of Species will help to encourage and incentivise more ambitious conservation goals, moving beyond triage at the edge of extinction.

JRR Tolkien and Susan Cooper; CS Lewis and Diana Wynne Jones. Oxford will always be associated with the greats of fantasy writing – and now the genre is being placed centre stage, courtesy of a new University-run summer school that will allow members of the public to explore this often-overlooked branch of literature.

Titled 'Here Be Dragons', the summer school will run from 11-13 September and is also aimed at prospective Oxford students with an interest in the fantasy genre.

Academics from Oxford's Faculty of English and invited speakers will present a series of talks on the history of fantasy literature, the major writers, and cross-cutting themes.

Organiser Dr Stuart Lee, from Oxford's Faculty of English, said: 'Oxford is the natural home for a summer school on fantasy literature. Many of the great British writers taught or studied here, and the English Faculty is building up an international research profile in the genre.

'If you are interested in fantasy literature – where it came from, what inspired the major writers, how to study it – then this is the school for you.'

The registration fee for the summer school (£200, or £150 for students) covers attendance and catering. For further information, see the full programme or the booking page.

The summer school coincides with a major free exhibition running throughout the summer at the Bodleian Libraries, titled Tolkien: Maker of Middle-earth.

The exhibition explores Tolkien's legacy, from his genius as an artist, poet, linguist and author to his academic career and private life. Visitors will be taken on a journey through Tolkien's most famous works, The Hobbit and The Lord of the Rings, encountering an array of draft manuscripts, striking illustrations and maps drawn for his publications. Objects on display include Tolkien's early abstract paintings, the touching tales he wrote for his children, rare objects that belonged to Tolkien, exclusive fan mail, and private letters.

The exhibition runs from 1 June to 28 October.

What are the new 'one-stop shops' for less obvious cancer symptoms, and how is this service being developed and evaluated in Oxfordshire? GP and clinical researcher Dr Brian D Nicholson, from the Nuffield Department of Primary Care Health Sciences, is part of the team that developed the region’s pilot site, one of ten across the country. He explains why understanding non-specific symptoms is important.

Last week the New Scientist reported 'around half of people with cancer have vague or non-specific symptoms, such as loss of appetite or weight. As a result, they can end up being referred to several specialists before receiving a diagnosis.'

The Telegraph added 'new "one-stop shops" to speed up cancer diagnosis are being trialled across the country for the first time. GPs can refer patients suffering from "vague" symptoms… to undergo multiple tests for different cancers.'

Cancer Research UK’s director of early diagnosis, Sara Hiom, was quoted: 'We’re confident that these ten pilot centres will give us a much better understanding of what’s needed to speed up the diagnosis and treatment of people with less obvious symptoms, improve their experience of care and, ultimately, survival.'

The public response was mixed, ranging from outrage at another postcode lottery in NHS resource allocation to reminders that the initiative is a pilot, subject to evaluation before being rolled out nationally.

I’m part of a team of GPs and health researchers at the University of Oxford’s Nuffield Department of Primary Care Health Sciences (NDPCHS) who conduct research into non-specific cancer symptoms and who developed the “one-stop shop” pilot site for Oxfordshire. Known as the Suspected CANcer (SCAN) pathway, the pilot was developed in close collaboration with clinicians, researchers, commissioners and health care professionals from the Oxfordshire Clinical Commissioning Group and Oxford University Hospitals NHS Foundation Trust (OUH).

Our site is one of ten diagnostic centre pilot sites set up by local health care teams as part of the Accelerate Coordinate Evaluate (ACE) programme, funded by NHS England, Cancer Research UK and Macmillan.

Why non-specific symptoms?

Specific cancer symptoms point to cancer of a specific body location. For example, coughing up blood can be linked with lung cancer, while difficulty swallowing can be linked with cancer of the food pipe (the oesophagus). Specific symptoms give a clear signal to GPs about where in the body to perform tests for cancer, and the NHS provides GPs with rapid access to tests.

Non-specific cancer symptoms can be more challenging for GPs and include tiredness, loss of appetite, tummy pain, feeling generally unwell and weight loss. They are more common than specific symptoms and are also linked with many benign (non-cancer) long-term and short-term conditions commonly seen by GPs. More challenging still, non-specific symptoms can be linked with cancer in more than one part of the body, making it harder for GPs to choose the most appropriate investigation to perform first.

Until now, the NHS has not been set up to give GPs access to rapid tests for urgently investigating these non-specific symptoms across different parts of the body.

Weight loss, for example, is linked with ten different types of cancer and is the second most common symptom of colorectal, lung, pancreatic and kidney cancer. Our latest research on weight loss (BJGP) shows that in the over-60s, the risk of cancer in patients presenting with weight loss is higher than previously thought, so systems that fast-track investigation of unexplained weight loss, to diagnose or rule out cancer earlier, are urgently needed.

Ten centres, ten different approaches

The newly launched cancer diagnostic initiative has been developed to evaluate what sort of 'one-stop shop' could be effective at diagnosing cancer in the NHS, building on similar clinics used in Denmark. This has taken a lot of time and careful organisation, as each of the ten pilot sites has developed a unique “one-stop shop” approach that can be provided in its local area. To learn the most about what makes an effective “one-stop shop”, the pilot sites were chosen to span urban and rural locations and richer and poorer areas. They also use different combinations of health professionals and local services, accept a range of non-specific symptoms, and use a variety of different tests.

Oxford’s one-stop-shop for cancer diagnosis

Our local pilot, SCAN, allows all GPs in Oxfordshire to refer patients aged 40 years or over if there is no other urgent referral pathway available, and if they are concerned about cancer or serious disease following a face-to-face consultation for a range of non-specific symptoms. These include: unexplained weight loss, severe unexplained fatigue, persistent nausea or appetite loss, new atypical pain, or unexplained results from a laboratory test. GPs may also refer if they have clinical suspicion of cancer or serious disease, or a ‘gut feeling’ that their patient warrants investigation.

All patients referred to SCAN undergo a broad panel of laboratory blood and faecal tests and low-dose computed tomography (CT) imaging of the chest, abdomen and pelvis. Depending on the results, they may then be referred to the SCAN clinic, where clinicians with expertise in non-specific symptoms evaluate them in more depth; patients are guided through the process by a specialist radiographer known as the SCAN navigator. Alternatively, depending on the results of the initial tests, the patient may get a rapid referral to another cancer pathway, or for a different serious disease.

Evaluating effectiveness, first and foremost

Importantly, this initiative is collecting data, with patients’ consent, to evaluate each “one-stop shop” comprehensively. As the number of patients referred to these pilot sites grows and we collect more data, we will be able to determine what an effective service for patients with non-specific symptoms could look like in the NHS. Once the model has been refined, these one-stop shops will be expanded and made available right across the NHS, so that all patients have the opportunity to access rapid investigation via their GP to speed up the diagnosis and treatment of their non-specific symptoms.

In the final part of our women in AI series, Dr Vidya Narayanan, a researcher at the Oxford Internet Institute and post-doctoral researcher on the Computational Propaganda Research Project, discusses her work understanding the effects of technology and social media on political processes in the United States and in the UK.

What is AI (in your own personal view)?

In my view, Artificial Intelligence is the ability of computer programs to make independent decisions with little or no human intervention and to adapt to new situations.

AI has been a subject of intense speculation and rigorous academic study since the 1950s, when Alan Turing asked if machines could think. While the theory of AI has developed continuously since then, it’s only in the past decade, with our access to vast stores of data and the advent of graphics processing units that can process this data in parallel, that applications of AI seem finally ready to step out of the pages of science fiction books and have a profound impact on our everyday lives.

What are the biggest AI misconceptions that you have encountered?

As with any relatively new and powerful technology, AI has the power to split opinion among technocrats, policy makers and the general public. To me, it feels as though we still lack the evidence to categorise specific notions about AI as misconceptions. There is little consensus among academicians and other AI researchers on a timeline for the development of Artificial General Intelligence (AGI), a level of intelligence that allows a computer to handle any intellectual task that a human can. The onus is on us, as academicians, to continually assess the state of the art in AI, communicate these findings to the general public in an accessible manner, and build an informed consensus about AI among people.

What do you think can be done to encourage more women into AI, and what has your own personal experience been in the field?

This is a very important question, and one that concerns me deeply, both as a woman and as a mother. The place to start is at school, working towards creating an environment where girls can interact with technology in a peer-group setting. It’s vital to encourage them to think that they can be developers of technology as well as consumers. I have been very fortunate to have worked with very supportive colleagues and mentors, and have had an extremely positive experience working as a woman in AI. On a personal level, I would like to support efforts to create similarly supportive work environments for women across the globe, particularly in technology.

What drew you towards a career in AI?

Back when I was a graduate student at Pennsylvania State University in the US, the research team was working on decision-making problems in distributed systems that couldn’t be solved by conventional optimisation techniques. Among the approaches we considered were multi-agent systems that use reinforcement learning to make decisions in dynamic and uncertain environments. This was my introduction to Artificial Intelligence, and I moved to the UK to pursue a PhD in Computer Science in the Intelligence, Agents and Multimedia lab at Southampton, which was doing pioneering work in the area. Since then, I have been acutely aware of the immense potential of AI to kick-start a new technological revolution and change our lives.

As a scientist, I wished to play an active part in creating some of these methods and this drew me towards a career in AI. More recently, I have been motivated by a need to use AI for social good and harness its capacity to solve some of the most pressing problems in the world, including equitable sharing of resources across the globe, examining the impact of social media interactions on democratic processes, the effect of private companies acquiring vast amounts of personal data and the potential for this to be misused by political campaigners - particularly in fragile democracies.

What research are you most proud of?

I returned to academia in November 2017 after a career break to care for my young children. Since then I have joined the Computational Propaganda project, exploring the role of social networks in spreading fake news and influencing electoral processes around the world.

My colleagues and I have been studying the effects of technology and social media on political processes both in the US and in the UK. In particular, we have looked at bot activity on Twitter during the Brexit referendum and the spread of junk news among audience groups on both Twitter and Facebook. This is a fascinating area that brings together the disciplines of Political Science, Sociology and Computer Science to strengthen democratic processes. I’m very motivated to extend this study by creating and using state-of-the-art technologies to study political polarisation, the spread of junk news on social media platforms, and misinformation campaigns by state and non-state actors to influence elections around the world.

What are the biggest challenges facing the field?

The biggest challenges for the field lie in addressing the well-documented risks of AI: for example, disruption to jobs, wealth creation for a few individuals that widens social and economic divides, and the fact that most innovation in AI is driven by private companies. I also think there is a need for policymakers to regulate the development of AI, so that we can make algorithms accountable and rid automated decision-making systems of inherent biases against sub-populations. We need to really harness the power of AI to create egalitarian societies around the world.

What motivates you most in your research?

I enjoy the challenge of using mathematical techniques, computer science and data sets to find solutions to real-life problems. I’m acutely conscious of the fact that while some parts of the world are on the brink of a ‘Fourth Industrial Revolution’, there are people elsewhere who haven’t benefited even from the first Industrial Revolution and who lack access to food, water and electricity. My primary motivation is to build AI-powered applications that address these issues, by developing fundamental advances in the theory of AI as a computer scientist at Oxford University.

Who inspires you?

There are a number of people who inspire me: Ada Lovelace, Bertrand Russell, Martin Luther King and Alan Turing. I also loved the film Hidden Figures, the true story of Katherine Johnson, Dorothy Vaughan and Mary Jackson, three brilliant African-American women who worked at NASA and played a key role in the space race, helping to get John Glenn into orbit around the Earth. It has a brilliant cast (Janelle Monáe, Taraji P. Henson and Octavia Spencer) and is incredibly inspirational, particularly for women in STEM.
