Features

Oxford University scientists carry out clinical trials for a range of medical conditions every year. The hope with each one is that it could lead to a viable treatment to cure or alleviate that condition. It is easy, therefore, to think that a successful trial is one that produces such a treatment, while any other result is a failure. Not so, as a recent study from Oxford's researchers in South East Asia shows.

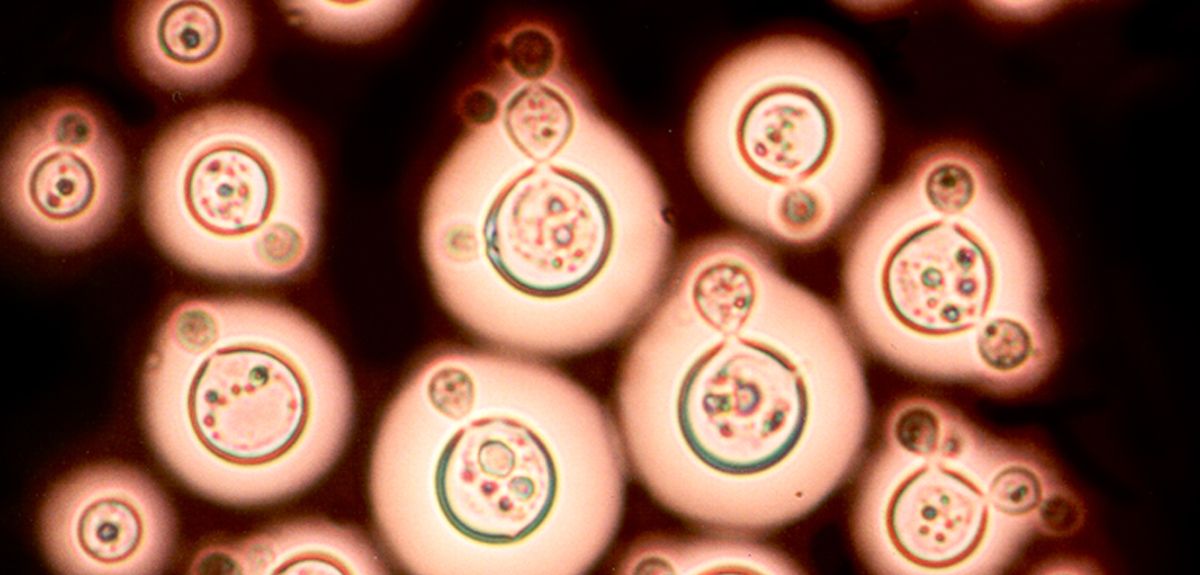

Cryptococcal meningitis is a fungal infection of the brain, which primarily affects people living with HIV. The disease is estimated to cause over 600,000 deaths each year, with the majority of cases occurring in sub-Saharan Africa and Asia.

Dexamethasone is already being used by some doctors as a treatment for cryptococcal meningitis. They are doing that with the best of intentions, believing it will benefit their patients. However, our trial has shown that that is in fact worse than standard treatment.

Dr Justin Beardsley, Oxford University Clinical Research Unit

Recent research has shown the best way to use the available anti-fungal therapies, but even with optimal treatment, between three and seven out of every ten people affected will die in areas where the disease is most prevalent. The Oxford team therefore studied what happened when an extra drug was given alongside anti-fungal treatments.

Dr Justin Beardsley led the study. He explains: 'Given the relatively poor success rate for existing anti-fungals and the fact that there are no new anti-fungal drugs in the pipeline, we decided to trial an adjunctive treatment to reduce HIV-associated cryptococcal meningitis' unacceptably high rates of death and disability. An adjunctive treatment is something given to patients to improve the effectiveness of their primary treatment – in this case, the anti-fungal drugs.'

The team selected a steroid called dexamethasone as the adjunctive agent. It had shown promise in observational studies and lab-based animal models and has proven efficacy in some other forms of meningitis. It is also a well-understood drug which hadn't been associated with adverse events when used to treat infectious diseases. Furthermore, despite a lack of evidence, it is already frequently used to treat cryptococcal meningitis.

For the trial, volunteers with cryptococcal meningitis were given either dexamethasone or placebo alongside the standard anti-fungal therapy. The volunteer patients were in Vietnam, Thailand, Indonesia, Laos, Uganda and Malawi. Each was carefully monitored to track the microbiological and clinical responses to treatment.

The trial was stopped because the team recognised that outcomes were worse for patients receiving dexamethasone: while they were no more likely to die than those receiving placebo, their infection cleared more slowly, they had more treatment complications and they were less likely to recover without disability.

So is this a failure?

Dr Beardsley explains that success in a clinical trial is a definitive result. A definite 'yes' is wonderful but a definite 'no' means you can stop researching a treatment that will be ineffective and focus efforts elsewhere. In this case, there is an added and immediate benefit for current patients:

'Dexamethasone is already being used by some doctors as a treatment for cryptococcal meningitis. They are doing that with the best of intentions, believing it will benefit their patients. However, our trial has shown that that is in fact worse than standard treatment. Our evidence will inform those doctors so they know to stop using a potentially harmful treatment.

'It is also important to note that we don't simply record whether something works or not. Samples from patients are analysed and studied, offering huge amounts of data. The clinical trial has ended but our work is ongoing. It should reveal more details about how the immune system responds to cryptococcal meningitis. In turn, those insights may deliver future breakthroughs in the treatment of this deadly and neglected condition.'

It’s worth remembering: even apparently negative results contribute to positive improvements in healthcare.

Researchers have shed light on a critical paradox of modern medical research – why research is getting more expensive even though many elements of studies have become far cheaper and faster to carry out. In a paper in the journal PLOS ONE, they suggest that improvements in research efficiency are being outweighed by accompanying reductions in effectiveness.

Faster, better, cheaper

Modern DNA sequencing is more than a billion times faster than the original 1970s sequencers. Modern chemistry means a single chemist can create around 800 times more molecules to test as possible treatments than they could in the early 1980s. X-ray crystallography takes around a thousandth of the time it did in the mid-1960s. Almost every research technique is faster or cheaper – or faster and cheaper – than ever.

Added to that are techniques unavailable to previous generations of researchers – like transgenic mice, or computer-based virtual models underpinned by processing power that itself gets more powerful and less expensive every year.

Lower costs yet higher budgets?

So why has the cost of getting a single drug to market doubled around every nine years since 1950? To put that into context, for every million pounds spent on drug research in 1950, in 2010, despite all the advances in speed and all the reductions in individual costs, you needed to spend around £128,000,000 to achieve similar success.
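As a rough check on the compounding described above, the sketch below assumes the cost doubles exactly every nine years (the text only says 'around'). The function name `cost_multiplier` and its parameters are illustrative and do not come from the paper.

```python
def cost_multiplier(years_elapsed, doubling_period_years=9.0):
    """Growth factor if cost doubles once every `doubling_period_years`."""
    return 2 ** (years_elapsed / doubling_period_years)


# 1950 to 2010 is 60 years, which is between six and seven doublings.
sixty_year_factor = cost_multiplier(2010 - 1950)

# Seven complete doublings (63 years) give the 128-fold figure quoted in the text.
seven_doublings_factor = cost_multiplier(7 * 9)

print(f"After 60 years: about {sixty_year_factor:.0f} times the 1950 cost")
print(f"After seven doublings: {seven_doublings_factor:.0f} times the 1950 cost")
```

Under that assumption, 60 years of growth gives a factor of roughly 100, and the £128,000,000 figure corresponds to seven full doublings. Either way the cost has risen about a hundredfold.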

Those figures may explain why research in certain areas has stalled. For example, in the forty years between 1930 and 1970, ten classes of antibiotics were introduced. In the forty-five years since 1970, there have been just two.

Better engine, worse compass

This question is tackled by two researchers in a paper published in the journal PLOS ONE. One of them is Dr Jack Scannell, an associate of the joint Oxford University-UCL Centre for the Advancement of Sustainable Medical Innovation (CASMI).

Applying a statistical model, they show that what is critical to the overall cost of research is not the brute force efficiency of particular processes but the accuracy of the models being used to assess whether a particular molecule, process or treatment is likely to work.

Dr Scannell explains: 'The issue is how well a model correlates with reality. So if we are looking for a treatment for condition X and we inject molecule A into someone with it and watch to see what happens, that has high correlation in that person – a value of 1. It also has some insurmountable ethical issues, so we use other models. Those include animal testing, experiments on cells in the lab or computer programmes.

'None of those will correlate perfectly, but they all tend to be faster and cheaper. Many of these models have been refined to become much faster and cheaper than when initially conceived.

'However, what matters is their predictive value. What we showed was that a reduction in correlation of 0.1 could offset a ten-fold increase in speed or cost efficiency. Let's say we use a model 0.9 correlated with the human outcome and we get 1 useful drug from every 100 compounds tested. In a model with 0.8 correlation, we'd need to test 1000; a 0.7 correlation needs 10,000; a 0.6 correlation needs 100,000 and so on.

'We could compare that to fitting out a speedboat to look for a small island in a big ocean. You spend lots of time tweaking the engine to make the boat faster and faster. But to do that you get a less and less reliable compass. Once you put to sea, you can race around but you'll be racing in the wrong direction more often than not. In the end, a slower boat with a better compass would get there faster.

'In research we've been concentrating on beefing up the engine but neglecting the compass.'
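The arithmetic in Dr Scannell's illustration can be written out as a small sketch. It simply extrapolates the quoted rule of thumb (each 0.1 drop in correlation means ten times as many compounds to test), anchored at 100 compounds for a 0.9 correlation. It is not the statistical model from the paper, and the function names are hypothetical.

```python
def compounds_per_useful_drug(correlation, ref_correlation=0.9, ref_compounds=100):
    """Compounds to test per useful drug, extrapolating the quoted rule of thumb."""
    # Number of 0.1-sized drops in correlation below the reference model.
    tenth_steps = (ref_correlation - correlation) / 0.1
    return ref_compounds * 10 ** tenth_steps


def relative_search_time(correlation, speed_multiplier=1.0):
    """Time to find one useful drug, relative to a 0.9-correlated model at speed 1."""
    return compounds_per_useful_drug(correlation) / (100 * speed_multiplier)


for corr in (0.9, 0.8, 0.7, 0.6):
    print(corr, round(compounds_per_useful_drug(corr)))

# A screen that is ten times faster but 0.1 less correlated is no quicker overall.
print(round(relative_search_time(0.8, speed_multiplier=10.0), 6))
```

In speedboat terms, `speed_multiplier` is the engine and `correlation` is the compass: a tenfold gain in the first is cancelled by a 0.1 loss in the second.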

He adds that beyond the straightforward economics of drug discovery, the use of less valid models may also explain why it is getting harder to reproduce published scientific results – the so-called reproducibility crisis. Models with lower predictive values are less likely to reliably generate the same results.

Retiring the super models

There are two possible explanations for this decline in the standard of models.

Firstly, good models get results. There is no point finding lots of cures for the same disease, so the best models are retired. If you’ve invented the drugs that beat condition X, you don’t need a model to test possible drugs to treat condition X anymore.

Dr Scannell explains: 'An example would be the use of dogs to test stomach acid drugs. That was a model with high correlation and so they found cures for the condition. We now have those drugs. You can buy them over the counter at the chemist, so we don't need the dog model. When the medical problem is solved, the commercial drive is no longer there for drug companies and the scientific-curiosity drive is no longer there for academic researchers.'

The reverse of this is that less accurately predictive models remain in use because they have not yielded a cure, and scientists keep using them for want of anything better.

Secondly, modern models tend to be further removed from living people. Testing chemicals against a single protein in a petri dish can be extremely efficient but, Scannell suggests, the correlation is lower: 'You can screen lots of chemicals at very low cost. However, they fail because their validity is often low. It doesn't matter that you can screen 1,000,000 drug candidates – you've got a huge engine but a terrible compass.'

He cautions that without further research it is not clear how much the issue is caused by having 'used up' the best predictive models or how much it is a case of choosing to use poor predictive models.

Re-aligning the compass

Is it not peculiar that the first useful antibiotic, the sulphanilamide drug prontosil was discovered by Gerhard Domagk in the 1930s from a small screen of available [compounds] (probably no more than several hundred), whereas screens of the current libraries, which include ~10,000,000 compounds overall, have produced nothing at all?

At one level, it is easy to see why efficiency has been prioritised over validity. Activity is easy to measure, simple to report and seductive to present. It is easy to show that you have doubled the number of compounds tested and it sounds like that will get results faster.

But Dr Scannell and his co-author Dr Jim Bosley say that instead we should invest in improving the models we use. They highlight the focus on model quality in environmental science, where the controversy around anthropogenic global warming has pushed scientists to explain and justify the models they use. They suggest that this approach – known as 'data pedigree' – could be applied in health research as well, and that it should be part of grant-makers' decision processes. That would encourage researchers to fine-tune their compass as much as their engine.

Dr Scannell concludes: 'If we are to harness the power of the incredible efficiency improvements we have seen, we must also be better at directing all that brute-force power. Better processes must be allied to better models in order to generate better results. We are living with the alternative – a high-speed ride that, all too often, goes nowhere fast.'

Many of us know the feeling of standing in front of a subway map in a strange city, baffled by the multi-coloured web staring back at us and seemingly unable to plot a route from point A to point B.

Now, a team of physicists and mathematicians has attempted to quantify this confusion and find out whether there is a point at which navigating a route through a complex urban transport system exceeds our cognitive limits.

After analysing the world's 15 largest metropolitan transport networks, the researchers estimated that the information limit for planning a trip is around 8 bits. (A 'bit' is a binary digit – the most basic unit of information.)

Additionally, similar to the 'Dunbar number', which estimates a limit to the size of an individual's friendship circle, this cognitive limit for transportation suggests that maps should not consist of more than 250 connection points to be easily readable.
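To see how the two figures relate, recall that choosing one of N equally likely options takes log2(N) bits of information. The sketch below uses only that standard definition. How the researchers derived their limits is not described here, so the link between 8 bits and roughly 250 connection points is an illustration, and the function names are hypothetical.

```python
import math


def bits_needed(equally_likely_options):
    """Information, in bits, needed to single out one of N equally likely options."""
    return math.log2(equally_likely_options)


def options_within(bits):
    """Largest number of equally likely options distinguishable with `bits` bits."""
    return 2 ** bits


print(options_within(8))
print(round(bits_needed(250), 2))
```

Eight bits distinguish 256 options, so a map with around 250 connection points sits just under the 8-bit limit.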

Using journeys with exactly two connections as their basis (that is, visiting four stations in total), the researchers found that navigating transport networks in major cities – including London – can come perilously close to exceeding humans' cognitive powers.

And when further interchanges or other modes of transport – such as buses or trams – are added to the mix, the complexity of networks can rise well above the 8-bit threshold. The researchers demonstrated this using the multi-modal transportation networks from New York City, Tokyo, and Paris.

Mason Porter, Professor of Nonlinear and Complex Systems in the Mathematical Institute at the University of Oxford, said: 'Human cognitive capacity is limited, and cities and their transportation networks have grown to the point where they have reached a level of complexity that is beyond human processing capability to navigate around them. In particular, the search for a simplest path becomes inefficient when multiple modes of transport are involved and when a transportation system has too many interconnections.'

Professor Porter added: 'There are so many distractions on these transport maps that it becomes like a game of Where's Waldo?

'Put simply, the maps we currently have need to be rethought and redesigned in many cases. Journey-planner apps of course help, but the maps themselves need to be redesigned.

'We hope that our paper will encourage more experimental investigations on cognitive limits in navigation in cities.'

The research – a collaboration between the University of Oxford, Institut de Physique Théorique at CEA-Saclay, and Centre d'Analyse et de Mathématique Sociales at EHESS Paris – is published in the journal Science Advances.

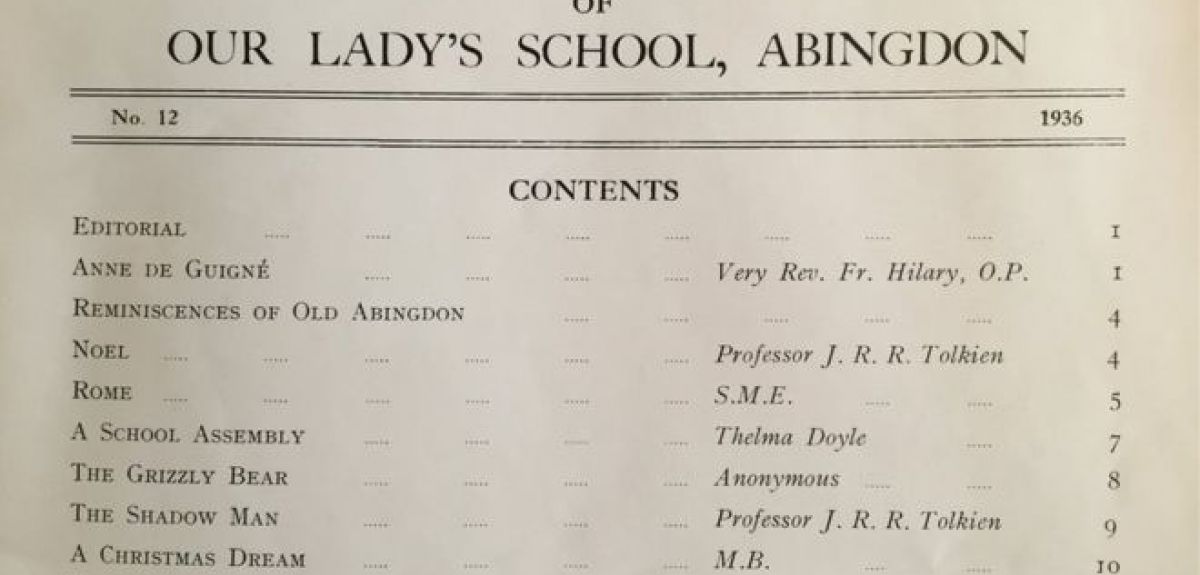

Two poems by author JRR Tolkien have been discovered in a 1936 copy of an Oxfordshire school's annual.

It is believed that Tolkien came to know Our Lady’s School in Abingdon while he was a professor of Anglo-Saxon at Oxford University.

Dr Stuart Lee, a Tolkien expert in Oxford University's English Faculty, says the poems do not add a lot to our understanding of Tolkien but that 'it is always fascinating to see previously unknown material'.

'Tolkien is mainly known as a prose writer through his novels but he also wrote quite a bit of poetry some of which has been published,' he says.

'These two poems are additions, therefore, to a growing area of scholarship around his verse.'

The two poems, published under the name of 'Professor J.R.R. Tolkien', are called The Shadow Man and Noel, the latter of which is a Christmas poem.

'Of the poems Noel is a clear celebration of his Christianity/Catholicism,' explains Dr Lee. 'It shows the transformation of dark to light with the coming of Christ.

'It is still a very Tolkien poem though with archaic/classical imagery (sword/sheath, o’er … dale).

'The Shadow Man is more interesting in some ways as it is like some of his other poems. It suggests a folk-tale origin, but is elusive in its exact provenance, and also quite dark and sinister.'

A year later, in 1937, Tolkien's first literary success, The Hobbit, was published. Dr Lee says The Shadow Man reminds him of the poems contained in the Middle-earth books.

'Tolkien wrote a few poems like this which almost feel like the type of poem someone would recite in The Hobbit and The Lord of the Rings,' he says.

His association with Our Lady's School is also interesting, says Dr Lee, because it shows a prominent professor at Oxford taking the time to visit and support a school.

'It shows how generous Tolkien was,' says Dr Lee. 'He is noted as doing a lot of talks for local schools, and this is yet another example (albeit early) of Oxford’s outreach approach!'

It has been known for some time that plant roots can communicate with plant shoots. Now, a new paper from Oxford researchers (working in collaboration with researchers from the Chinese Academy of Sciences in Beijing) tells us how.

Professor Nick Harberd, Sibthorpian Professor of Plant Sciences at Oxford, spoke to Science Blog about the work.

'The fact that plant roots communicate with plant shoots is in many ways not surprising. Plant shoots incorporate carbon (as CO2) from the air, while plant roots extract mineral nutrients (for example, nitrate or phosphate) from the soil. Coordination of these activities is likely to be selectively advantageous, because it enables the whole plant to optimize its metabolism and growth. But the mechanism of communication has, until recently, been relatively unknown.

'Our paper shows that communication is achieved via movement of an agent from shoot to root. This agent is a protein known as HY5, a kind of protein known as a "transcription factor" that can activate, or "switch on", genes. HY5 was already known to control rates of photosynthesis (CO2 capture) in the shoot. Our work shows that HY5 acts as an agent of communication between shoot and root by moving through the phloem vessels (part of the plant vascular system).

'HY5 travels from shoot to root, and when it reaches the root it activates a number of genes in root cells, including those genes that encode the nitrate transporters that extract nitrate from the soil. This activation is also dependent upon sugars (a measure of CO2 capture) that also travel through the phloem from shoot to root. Thus, movement of HY5 and sugars from shoot to root increases nitrate uptake by the root.

'Our use of genetics in this research has enabled us to discover things previously unknown. We screened for mutants that had reduced shoot-root communication. The logic here is that the mutants would lack genes that controlled shoot-root communication, thus allowing us to identify the genes which, in normal plants, control that communication. One of our mutants identified a gene encoding the previously well-characterised HY5 protein. What we discovered using this technique was that HY5 moves from shoot to root, something new that hadn’t previously been known to be a property of HY5.

'In terms of fundamental science, this new knowledge significantly advances our understanding of how plant shoots and roots communicate with one another, and especially how that communication coordinates whole-plant growth and metabolism. This is just the beginning of what is likely to become a major new area in fundamental plant biology.

'In terms of application, HY5 was first identified as a protein that regulates the growth of plants in response to light signals. Most crops (for example, wheat, rice or maize) are grown in relatively dense plantings in which individual plants tend to shade one another. Our findings mean that HY5 can now become a target for breeders to increase HY5 activity in the roots of shaded crop plants, thus improving uptake of nitrate from the soil.

'This is a major objective for plant breeders worldwide as it will increase the efficiency with which crops use fertilizer, reduce the damaging ecological impacts of fertilizer run-off from fields, and in general contribute to the environmentally sustainable increases in crop yields that we need to feed the growing world population.'

The paper 'Shoot-to-Root Mobile Transcription Factor HY5 Coordinates Plant Carbon and Nitrogen Acquisition' is published in Current Biology.
