How Oxford and Google DeepMind are making artificial intelligence safer

Oxford academics are teaming up with Google DeepMind to make artificial intelligence safer.

Laurent Orseau of Google DeepMind and Stuart Armstrong, the Alexander Tamas Fellow in Artificial Intelligence and Machine Learning at the University of Oxford's Future of Humanity Institute (FHI), will present their research on the interruptibility of reinforcement learning agents at the Uncertainty in Artificial Intelligence conference in New York City later this month.

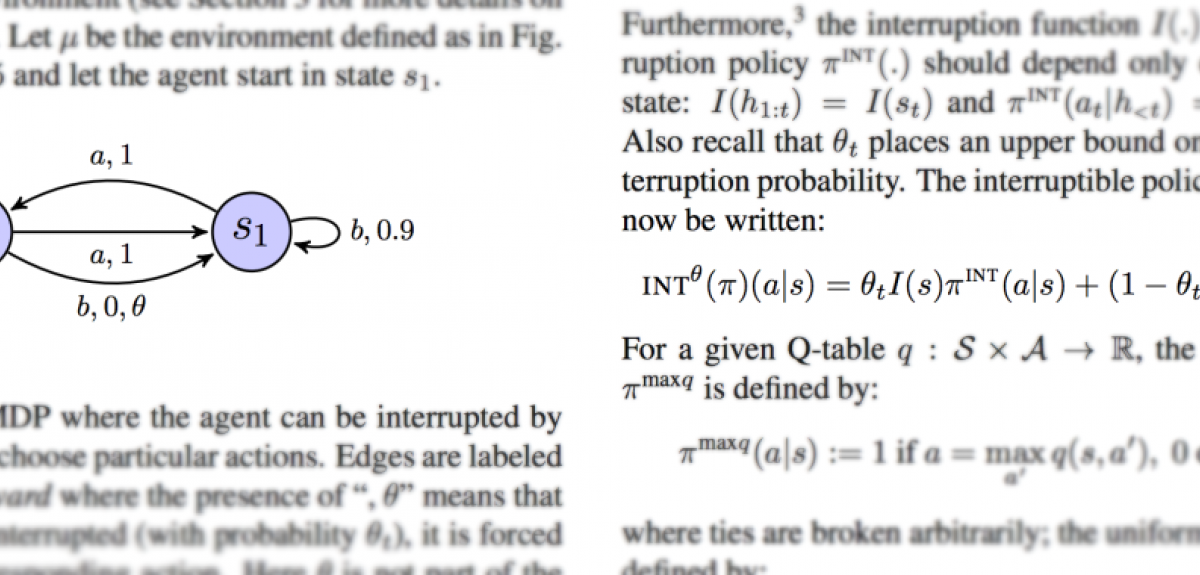

Orseau and Armstrong's research explores a method to ensure that reinforcement learning agents can be repeatedly and safely interrupted by human or automatic overseers. The method is designed so that the agents do not “learn” about these interruptions and do not take steps to avoid or manipulate them.

The researchers say: 'Safe interruptibility can be useful to take control of a robot that is misbehaving… take it out of a delicate situation, or even to temporarily use it to achieve a task it did not learn to perform.'

Laurent Orseau of Google DeepMind says: 'This collaboration is one of the first steps toward AI Safety research, and there's no doubt FHI and Google DeepMind will work again together to make AI safer.'

A more detailed story can be found on the FHI website, and the full paper is available to download.